MMDetection

Tutorials

Google Colaboratory - Image and Video with Faster R-CNN on Version 3.3.0

https://colab.research.google.com/drive/11pbaSUnA8eWopz2-OHh9XkCiTQdbMl8t

Google Colaboratory - MS-COCO Training with Faster R-CNN on Version 3.3.0

https://colab.research.google.com/drive/1tYssvTbCa0zuICyeVShUx42PGMeJxWlW

Google Colaboratory - Image and Video with Faster R-CNN/YOLOX/DETR/Mask R-CNN/Panoptic FPN/Mask2Former on Version 2.28.2

https://colab.research.google.com/drive/1hbOLBwOBeCVOELl3-I2yXD0QTmWKVylZ

Getting Started

https://mmdetection.readthedocs.io/en/latest/get_started.html

https://qiita.com/apiss/items/48475abc20abf26c0d27

https://qiita.com/fam_taro/items/7f028dfeae2a79a10fe1

Easier Preparation on Google Colab

The official approach uses OpenMIM and the latest repositories on GitHub, but the following steps simply work on Google Colab:

!pip install mmengine
!pip install mmcv==2.1.0
!pip install mmdet
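After installation, it can help to confirm which versions actually ended up in the environment. The following is a minimal sketch using only the Python standard library; the helper name installed_versions is hypothetical, and including torch in the list is an assumption (it is not installed by the commands above but is preinstalled on Google Colab).

```python
from importlib import metadata


def installed_versions(packages=("mmengine", "mmcv", "mmdet", "torch")):
    """Return {package: version string, or None if the package is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Print one line per package so mismatches are easy to spot.
for name, version in installed_versions().items():
    print(f"{name}: {version or 'not installed'}")
```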

Tricks and Traps for Version 3

## 01. Installing MMEngine is recommended
mim install mmengine

## 02. show_result_pyplot and model.show_result() have been removed (see below for alternative approaches)
#from mmdet.apis import show_result_pyplot

## 03. Register all modules
from mmdet.utils import register_all_modules
register_all_modules()

## 04. Some configuration file names have been changed
#mim download mmdet --config faster_rcnn_r50_fpn_1x_coco --dest $config_dir
mim download mmdet --config faster-rcnn_r50_fpn_1x_coco --dest $config_dir

Information - Useful Tools

Information - Loading Output Pickle (results.pkl)

import mmengine

results = mmengine.load('results.pkl')

print(results[0]['pred_instances']['labels']) ## tensor([0, 0, 0])

print(results[0]['pred_instances']['bboxes']) ## tensor([[123.4567, 234.5678, 345.6789, 456.7890], ...]) (one [x1, y1, x2, y2] row per detection)

print(results[0]['pred_instances']['scores']) ## tensor([0.9876, 0.8765, 0.7654])
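Once loaded, each entry can be filtered by score before further processing. The following is a minimal sketch, assuming each entry has the 'pred_instances' layout shown above; the helper name filter_predictions and the sample values are made up for illustration, and plain lists stand in for tensors so the snippet runs without MMEngine or PyTorch.

```python
def filter_predictions(sample, score_thr=0.5):
    """Keep (label, bbox, score) triples whose score is at least score_thr."""

    def as_list(values):
        # Tensors and NumPy arrays both provide .tolist(); plain lists pass through.
        return values.tolist() if hasattr(values, "tolist") else list(values)

    inst = sample["pred_instances"]
    labels = as_list(inst["labels"])
    bboxes = as_list(inst["bboxes"])
    scores = as_list(inst["scores"])
    return [(l, b, s) for l, b, s in zip(labels, bboxes, scores) if s >= score_thr]


# Stand-in for one entry of mmengine.load('results.pkl'); the values are made up.
sample = {
    "pred_instances": {
        "labels": [0, 0, 2],
        "bboxes": [
            [10.0, 20.0, 110.0, 220.0],
            [15.0, 25.0, 100.0, 200.0],
            [300.0, 40.0, 360.0, 90.0],
        ],
        "scores": [0.98, 0.42, 0.77],
    }
}

for label, bbox, score in filter_predictions(sample, score_thr=0.5):
    print(label, bbox, f"{score:.2f}")
```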

Information - Evaluating mean Average Precision/Recall (mAP/mAR) with Specific IoU

## Output Test Results in COCO Format
python mmdetection/tools/test.py $config_file $checkpoint_file --cfg-options test_evaluator.outfile_prefix=$output_dir/results

import numpy as np
from pycocotools.coco import COCO
from pycocotools.cocoeval import COCOeval

coco_gt = COCO('instances.json')

coco_dt = coco_gt.loadRes('results.bbox.json')

## Default Evaluation with IoU = 50:95%
coco_eval = COCOeval(coco_gt, coco_dt, iouType='bbox')
#coco_eval.params.iouThrs = np.linspace(0.5, 0.95, int(np.round((0.95 - 0.5) / 0.05)) + 1, endpoint=True)
#coco_eval.params.iouThrs = np.array([0.5, 0.55, 0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, 0.95])
coco_eval.evaluate()
coco_eval.accumulate()
coco_eval.summarize()
#stats = coco_eval.stats

## Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.800
## Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 1.000
## Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.000
## Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000
## Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.800
## Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = -1.000
## Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.800
## Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.900
## Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.900
## Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000
## Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.900
## Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = -1.000

## Evaluation with IoU = 50%
coco_eval = COCOeval(coco_gt, coco_dt, iouType='bbox')
coco_eval.params.iouThrs = np.array([0.5])
coco_eval.evaluate()
coco_eval.accumulate()
coco_eval.summarize()
#stats = coco_eval.stats

## Average Precision (AP) @[ IoU=0.50:0.50 | area= all | maxDets=100 ] = 1.000
## Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 1.000
## Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = -1.000
## Average Precision (AP) @[ IoU=0.50:0.50 | area= small | maxDets=100 ] = -1.000
## Average Precision (AP) @[ IoU=0.50:0.50 | area=medium | maxDets=100 ] = 1.000
## Average Precision (AP) @[ IoU=0.50:0.50 | area= large | maxDets=100 ] = -1.000
## Average Recall (AR) @[ IoU=0.50:0.50 | area= all | maxDets= 1 ] = 1.000
## Average Recall (AR) @[ IoU=0.50:0.50 | area= all | maxDets= 10 ] = 1.000
## Average Recall (AR) @[ IoU=0.50:0.50 | area= all | maxDets=100 ] = 1.000
## Average Recall (AR) @[ IoU=0.50:0.50 | area= small | maxDets=100 ] = -1.000
## Average Recall (AR) @[ IoU=0.50:0.50 | area=medium | maxDets=100 ] = 1.000
## Average Recall (AR) @[ IoU=0.50:0.50 | area= large | maxDets=100 ] = -1.000

- A score of -1.000 means 'not applicable'

- coco_eval.params.iouThrs must be an np.array, not a list, for the correct values to be shown
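When the numbers are needed programmatically, coco_eval.stats holds the same twelve values in the order printed by summarize(). The following is a minimal sketch that attaches names to them and maps -1 to None; the short names and the helper name_coco_stats are hypothetical labels chosen here, not part of pycocotools, and a plain list stands in for coco_eval.stats.

```python
# Names follow the order of the twelve lines printed by summarize() for bbox evaluation.
COCO_STAT_NAMES = (
    "AP", "AP50", "AP75", "AP_small", "AP_medium", "AP_large",
    "AR_1", "AR_10", "AR_100", "AR_small", "AR_medium", "AR_large",
)


def name_coco_stats(stats):
    """Map the 12 summary values to names; negative values (-1) become None."""
    if len(stats) != len(COCO_STAT_NAMES):
        raise ValueError(f"expected {len(COCO_STAT_NAMES)} values, got {len(stats)}")
    return {
        name: (None if value < 0 else float(value))
        for name, value in zip(COCO_STAT_NAMES, stats)
    }


# Stand-in for coco_eval.stats, using the values of the default evaluation above.
stats = [0.8, 1.0, 0.0, -1, 0.8, -1, 0.8, 0.9, 0.9, -1, 0.9, -1]
for name, value in name_coco_stats(stats).items():
    print(f"{name}: {'n/a' if value is None else f'{value:.3f}'}")
```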

Information - Change Thresholds

from mmdet.apis import init_detector

model = init_detector(config_file, checkpoint_file, device=device)
test_cfg = model.test_cfg

## Example: Lower the Thresholds of Faster R-CNN
test_cfg.rcnn.score_thr = 0.01
test_cfg.rcnn.nms.iou_threshold = 1.0
test_cfg.rcnn.max_per_img = 20000
test_cfg.rpn.nms_pre = 20000
test_cfg.rpn.max_per_img = 20000
test_cfg.rpn.nms.iou_threshold = 1.0
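To see why these settings let almost every box through, the effect of score_thr, nms.iou_threshold, and max_per_img can be illustrated with a simplified, class-agnostic greedy NMS in pure Python. This is only a sketch of the general technique, not MMDetection's implementation (which runs per class on tensors); the function names and box values are made up, and the "default-like" values are assumed to resemble a typical Faster R-CNN config.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes, scores, score_thr, iou_threshold, max_per_img):
    """Drop low scores, then greedily suppress boxes overlapping a kept box."""
    order = sorted(
        (i for i, s in enumerate(scores) if s > score_thr),
        key=lambda i: scores[i],
        reverse=True,
    )
    keep = []
    for i in order:
        # A box is suppressed only if its IoU with a kept box EXCEEDS the threshold,
        # so iou_threshold=1.0 never suppresses anything.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep[:max_per_img]


boxes = [(0, 0, 100, 100), (10, 10, 110, 110), (200, 200, 300, 300)]
scores = [0.9, 0.6, 0.02]

print(filter_detections(boxes, scores, score_thr=0.05, iou_threshold=0.5, max_per_img=100))
print(filter_detections(boxes, scores, score_thr=0.01, iou_threshold=1.0, max_per_img=20000))
```

With the default-like values, the second box overlaps the first too strongly and the third falls below the score threshold, so only index 0 remains; with the lowered thresholds all three indices are kept.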

Troubleshooting - MMCV Compatibility on Google Colab

https://github.com/open-mmlab/mmcv/issues/3059

## Downgrading PyTorch on Google Colab (2.3.0+cu121) for MMCV Compatibility (Confirmed 2024/07/04)
!pip install torch==2.1.0 torchvision==0.16.0 torchaudio==2.1.0 --index-url https://download.pytorch.org/whl/cu121
!pip install --upgrade openmim
!mim install 'mmcv==2.1.0'

Troubleshooting - Conflict with Jedi (on Google Colab)

## Resolving the dependency conflicts for openmim: ipython 7.9.0 requires jedi>=0.10
!pip install --upgrade jedi

Troubleshooting - SSL Cert Verification Error

## processing faster-rcnn_r50_fpn_1x_coco...
## Traceback (most recent call last):
## ...
## File "/usr/lib/python3.10/ssl.py", line 1342, in do_handshake
## self._sslobj.do_handshake()
## ssl.SSLCertVerificationError: [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: certificate has expired (_ssl.c:1007)
## During handling of the above exception, another exception occurred:
## Traceback (most recent call last):
## ...
## File "/usr/lib/python3.10/site-packages/urllib3/util/retry.py", line 594, in increment
## raise MaxRetryError(_pool, url, error or ResponseError(cause))
## urllib3.exceptions.MaxRetryError: HTTPSConnectionPool(host='download.openmmlab.com', port=443): Max retries exceeded with url: /mmdetection/...
## (Caused by SSLError(SSLCertVerificationError(1, '[SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: certificate has expired (_ssl.c:1007)')))

To be investigated…

Troubleshooting - Visualizer Warning

https://mmengine.readthedocs.io/en/v0.10.4/_modules/mmengine/visualization/visualizer.html

## visualizer.py:196: UserWarning:
## Failed to add <class 'mmengine.visualization.vis_backend.LocalVisBackend'>, please provide the `save_dir` argument.
model.cfg.visualizer.vis_backends[0]['save_dir'] = output_dir

Troubleshooting - Visualizer Warning

## mmengine/utils/manager.py:113: UserWarning:
## <class 'mmdet.visualization.local_visualizer.DetLocalVisualizer'> instance named of visualizer has been created, the method `get_instance` should not accept any other arguments

To be investigated; no actual problem was recognized.

Troubleshooting - model.show_result() was removed after Version 2

https://github.com/open-mmlab/mmdetection/issues/10380

- The MMEngine Visualizer is recommended instead of show_result()

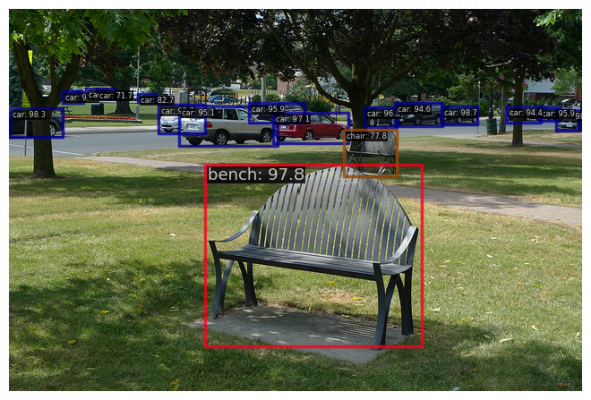

- Option A is the official approach, but the font may be too small on a large image

- Option B is a tailor-made approach for visualization with annotations

## Loading Image
import mmcv
image = mmcv.imread(img, channel_order='rgb')
#image = mmcv.imconvert(image, 'bgr', 'rgb')

## Model and Result
from mmdet.apis import init_detector, inference_detector
model = init_detector(config_file, checkpoint_file, device=device)
result = inference_detector(model, img)

## Option A

from mmdet.registry import VISUALIZERS

visualizer = VISUALIZERS.build(model.cfg.visualizer)

visualizer.dataset_meta = model.dataset_meta

visualizer.add_datasample('result', image, data_sample=result, draw_gt=False, show=True, wait_time=0, pred_score_thr=0.70, out_file='result.jpg')

visualizer.get_image()

visualizer.show()

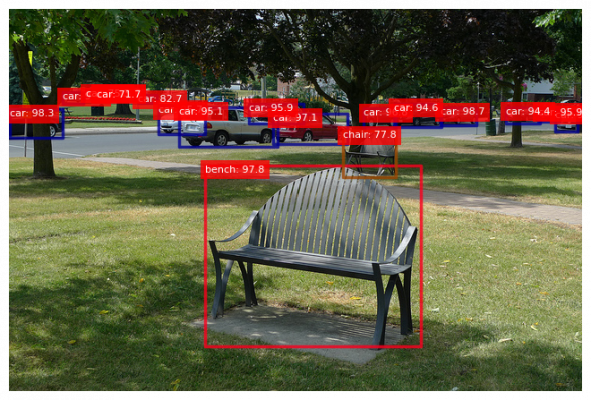

## Option B

import torch

import cv2

from mmengine.visualization import Visualizer

pred_score_thr = 0.70

keep = result.pred_instances.scores >= pred_score_thr

scores = result.pred_instances.scores[keep]

labels = result.pred_instances.labels[keep]

bboxes = result.pred_instances.bboxes[keep]

labels_classes = list(map(lambda x: model.dataset_meta['classes'][x], labels.tolist()))

labels_palette = list(map(lambda x: model.dataset_meta['palette'][x], labels.tolist()))

labels_print = list(map(lambda x, y: f'{x}: {(y * 100.0):.1f}', labels_classes, scores.tolist()))

bboxes_origins = list(map(lambda x: [x[0], x[1]], bboxes.tolist()))

visualizer = Visualizer(image=image)

visualizer.draw_bboxes(bboxes, edge_colors=labels_palette, line_widths=3)

visualizer.draw_texts(labels_print, torch.tensor(bboxes_origins), font_sizes=30, colors='white', bboxes=dict(facecolor='red', edgecolor='black', linewidth=0, alpha=0.8))

visualizer.get_image()

visualizer.show()

cv2.imwrite('result.jpg', cv2.cvtColor(visualizer.get_image(), cv2.COLOR_RGB2BGR))

Troubleshooting - List Index Out of Range

https://github.com/open-mmlab/mmdetection/issues/8529

## Traceback (most recent call last):
## File "mmdetection/tools/test.py", line 149, in <module>
## main()
## File "mmdetection/tools/test.py", line 145, in main
## runner.test()
## ...
## File "mmdetection/mmdet/evaluation/metrics/coco_metric.py", line 244, in results2json
## data['category_id'] = self.cat_ids[label]
## IndexError: list index out of range

Check that self.cat_ids contains all expected category IDs with no missing values (e.g., [1, 2, 3, 5, 6, 7, 8, 9, 10] is missing category ID 4, so the list is one entry shorter than the number of classes).
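A quick way to look for such gaps before running test.py is to compare the class names used in the config with the categories in the annotation file. The following is a minimal sketch, assuming the standard COCO JSON layout ("categories" and "annotations" keys); the helper name check_categories and the sample data are made up, and in practice the dictionary would come from json.load() on the annotation file.

```python
def check_categories(coco, classes):
    """Return (class names without a category entry, category IDs used but not defined)."""
    defined = {cat["name"]: cat["id"] for cat in coco.get("categories", [])}
    missing_names = [name for name in classes if name not in defined]
    used_ids = {ann["category_id"] for ann in coco.get("annotations", [])}
    undefined_ids = sorted(used_ids - set(defined.values()))
    return missing_names, undefined_ids


# Made-up annotation content: 'bird' has no category entry, and ID 3 is used but undefined.
coco = {
    "categories": [{"id": 1, "name": "cat"}, {"id": 2, "name": "dog"}],
    "annotations": [
        {"id": 10, "image_id": 1, "category_id": 1},
        {"id": 11, "image_id": 1, "category_id": 3},
    ],
}
classes = ("cat", "dog", "bird")

missing_names, undefined_ids = check_categories(coco, classes)
print("classes without a category entry:", missing_names)
print("category IDs used but not defined:", undefined_ids)
```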

Training with Custom Dataset

https://mmdetection.readthedocs.io/en/latest/user_guides/dataset_prepare.html

https://mmdetection.readthedocs.io/en/latest/user_guides/train.html

https://mmdetection.readthedocs.io/en/v2.2.1/tutorials/new_dataset.html

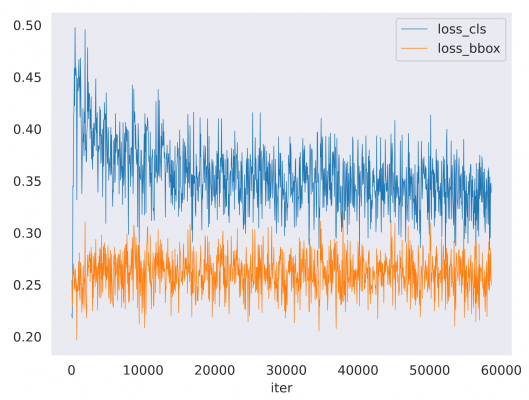

Information - Loss Functions

Information - Optimizers

https://pytorch.org/docs/stable/optim.html

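optimizer=dict(_delete_=True, ...) discards the keys of optimizer inherited from the base config, so only the keys given in this dict() remain instead of being merged with the original ones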
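The difference between merging and replacing can be illustrated with a small pure-Python simulation. This is a simplified sketch of the idea only, not MMEngine's actual merge code; the function merge_config and the optimizer values are made up for illustration.

```python
import copy


def merge_config(base, override):
    """Merge override into a copy of base; a dict carrying _delete_=True replaces instead."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict):
            value = dict(value)
            delete = value.pop("_delete_", False)
            if not delete and isinstance(merged.get(key), dict):
                # Default behavior: keep inherited keys and overwrite matching ones.
                merged[key] = merge_config(merged[key], value)
            else:
                # _delete_=True (or nothing to merge with): start from an empty dict.
                merged[key] = merge_config({}, value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


base = {"optimizer": {"type": "SGD", "lr": 0.02, "momentum": 0.9, "weight_decay": 0.0001}}

merged = merge_config(base, {"optimizer": {"type": "AdamW", "lr": 0.0001}})
replaced = merge_config(
    base,
    {"optimizer": {"_delete_": True, "type": "AdamW", "lr": 0.0001, "weight_decay": 0.05}},
)

print(merged["optimizer"])    # still carries the inherited 'momentum' key
print(replaced["optimizer"])  # only the keys given in the override
```

Without _delete_, the inherited momentum key survives the merge, which is the kind of leftover parameter that a different optimizer type may not accept.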

Information - Freeze Parameters

https://github.com/open-mmlab/mmdetection/blob/main/mmdet/models/backbones/resnet.py

- frozen_stages = -1 : not freezing any parameters

- frozen_stages = 0 : freezing the stem part only

- frozen_stages = n [n >= 1] : freezing the stem part and first n stages
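The rule above can be written down as a tiny helper that lists which parts of a ResNet-style backbone are frozen for a given value. This is a minimal sketch of the rule as stated here, not the code in resnet.py; the helper name frozen_parts, the default of four stages, and the "stem"/"layerN" labels are assumptions for illustration.

```python
def frozen_parts(frozen_stages, num_stages=4):
    """List the backbone parts frozen for a given frozen_stages value."""
    if not -1 <= frozen_stages <= num_stages:
        raise ValueError(f"frozen_stages must be between -1 and {num_stages}")
    if frozen_stages < 0:
        return []  # -1: nothing is frozen
    # 0 freezes the stem only; n >= 1 additionally freezes the first n stages.
    return ["stem"] + [f"layer{i}" for i in range(1, frozen_stages + 1)]


for value in (-1, 0, 1, 4):
    print(value, frozen_parts(value))
```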

Troubleshooting - loss=0 and acc=100

https://github.com/open-mmlab/mmdetection/issues/2744

https://mmdetection.readthedocs.io/en/3.x/user_guides/useful_tools.html

mmengine - INFO - Epoch(train) [1][ 50/300] lr: 1.9820e-03 loss: 0.1679 loss_rpn_cls: 0.0009 loss_rpn_bbox: 0.0000 loss_cls: 0.1669 acc: 100.0000 loss_bbox: 0.0000
mmengine - INFO - Epoch(train) [1][100/300] lr: 3.9840e-03 loss: 0.0000 loss_rpn_cls: 0.0000 loss_rpn_bbox: 0.0000 loss_cls: 0.0000 acc: 100.0000 loss_bbox: 0.0000
mmengine - INFO - Epoch(train) [1][150/300] lr: 5.9860e-03 loss: 0.0000 loss_rpn_cls: 0.0000 loss_rpn_bbox: 0.0000 loss_cls: 0.0000 acc: 100.0000 loss_bbox: 0.0000
mmengine - INFO - Epoch(train) [1][200/300] lr: 7.9880e-03 loss: 0.0000 loss_rpn_cls: 0.0000 loss_rpn_bbox: 0.0000 loss_cls: 0.0000 acc: 100.0000 loss_bbox: 0.0000
mmengine - INFO - Epoch(train) [1][250/300] lr: 9.9900e-03 loss: 0.0000 loss_rpn_cls: 0.0000 loss_rpn_bbox: 0.0000 loss_cls: 0.0000 acc: 100.0000 loss_bbox: 0.0000
mmengine - INFO - Epoch(train) [1][300/300] lr: 1.1992e-02 loss: 0.0000 loss_rpn_cls: 0.0000 loss_rpn_bbox: 0.0000 loss_cls: 0.0000 acc: 100.0000 loss_bbox: 0.0000
mmengine - INFO - Exp name: faster-rcnn_r50_fpn_1x_custom
mmengine - INFO - Saving checkpoint at 1 epochs
mmengine - INFO - Epoch(val) [1][ 50/100] ...
mmengine - INFO - Epoch(val) [1][100/100] ...
mmengine - INFO - Evaluating bbox...
Loading and preparing results...
mmengine - ERROR - coco_metric.py - compute_metrics - 465 - The testing results of the whole dataset is empty.
mmengine - INFO - Epoch(val) [1][100/100] ...
mmengine - WARNING - Since `metrics` is an empty dict, the behavior to save the best checkpoint will be skipped in this evaluation.

Case 01:

- Annotations likely have not been loaded correctly in this case: loss_bbox = 0.0000

- Triple-check the dataset and its format at the beginning

- Using browse_dataset.py is helpful for verifying whether annotations align with the data

- Check whether the "width" and "height" fields of each entry under "images" in the COCO annotations ({"width": <param>, "height": <param>}) match the actual image width and height
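Some of these checks can be automated on the annotation file alone. The following is a minimal sketch, assuming the standard COCO layout with bbox given as [x, y, width, height]; the helper name find_annotation_problems and the sample data are made up. It flags boxes that do not fit the declared image size (a typical symptom of wrong width/height fields) and images without annotations, but comparing against the real image files still has to be done separately.

```python
def find_annotation_problems(coco):
    """Report suspicious entries in a COCO-style annotation dictionary."""
    images = {img["id"]: img for img in coco.get("images", [])}
    annotated = set()
    problems = []
    for ann in coco.get("annotations", []):
        img = images.get(ann["image_id"])
        if img is None:
            problems.append(f"annotation {ann['id']}: unknown image_id {ann['image_id']}")
            continue
        annotated.add(img["id"])
        x, y, w, h = ann["bbox"]  # COCO boxes are [x, y, width, height]
        if w <= 0 or h <= 0:
            problems.append(f"annotation {ann['id']}: bbox has non-positive size")
        elif x < 0 or y < 0 or x + w > img["width"] or y + h > img["height"]:
            problems.append(
                f"annotation {ann['id']}: bbox exceeds the declared image size "
                f"{img['width']}x{img['height']}"
            )
    for image_id in sorted(set(images) - annotated):
        problems.append(f"image {image_id}: no annotations")
    return problems


# Made-up example: annotation 11 does not fit the image, and image 2 has no boxes.
coco = {
    "images": [
        {"id": 1, "width": 640, "height": 480},
        {"id": 2, "width": 640, "height": 480},
    ],
    "annotations": [
        {"id": 10, "image_id": 1, "category_id": 1, "bbox": [10, 20, 100, 50]},
        {"id": 11, "image_id": 1, "category_id": 1, "bbox": [600, 400, 100, 100]},
    ],
}

for problem in find_annotation_problems(coco):
    print(problem)
```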

Case 02:

- An error occurs if a specific class does not appear in the validation dataset

- Set val_cfg to None (or set train_cfg.val_interval larger than max_epochs) to skip the validation process and avoid the error

#train_cfg = dict(max_epochs=12, type='EpochBasedTrainLoop', val_interval=999)
val_cfg = None
val_dataloader = None
val_evaluator = None
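Before disabling validation, it may be worth confirming which classes are actually absent from the validation annotations. The following is a minimal sketch that counts instances per category, assuming the standard COCO layout; the helper name instances_per_class and the sample data are made up, and in practice the dictionary would come from json.load() on the validation annotation file.

```python
from collections import Counter


def instances_per_class(coco):
    """Return {category name: number of annotations}, including classes with zero."""
    names = {cat["id"]: cat["name"] for cat in coco.get("categories", [])}
    counts = Counter(ann["category_id"] for ann in coco.get("annotations", []))
    return {name: counts.get(cat_id, 0) for cat_id, name in names.items()}


# Made-up validation annotations in which 'bird' never appears.
coco_val = {
    "categories": [
        {"id": 1, "name": "cat"},
        {"id": 2, "name": "dog"},
        {"id": 3, "name": "bird"},
    ],
    "annotations": [
        {"id": 1, "image_id": 1, "category_id": 1},
        {"id": 2, "image_id": 1, "category_id": 2},
        {"id": 3, "image_id": 2, "category_id": 1},
    ],
}

counts = instances_per_class(coco_val)
print(counts)
print("classes missing from validation:", [n for n, c in counts.items() if c == 0])
```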

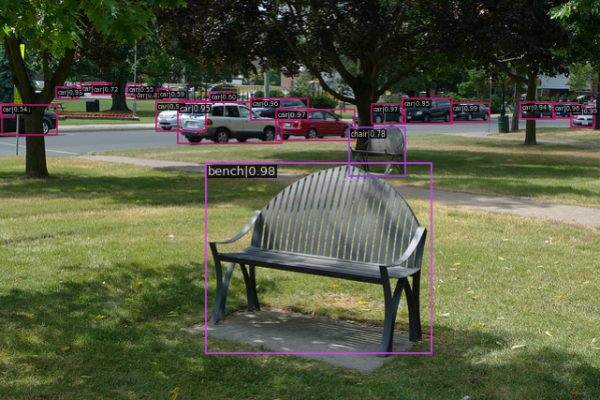

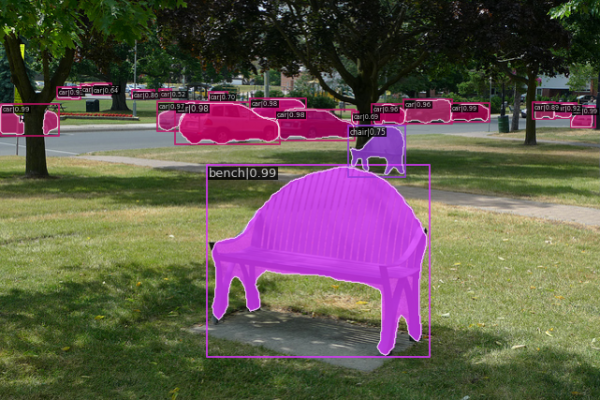

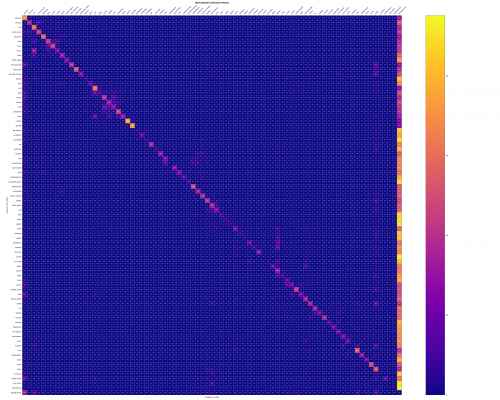

Results

Faster R-CNN

Mask R-CNN

Mask2Former

MS-COCO Training - to be continued...

Results (Video)

References

https://github.com/open-mmlab/mmdetection/blob/master/docs/en/get_started.md/

https://mmdetection.readthedocs.io/en/latest/get_started.html

https://mmdetection.readthedocs.io/en/latest/1_exist_data_model.html

https://dev.classmethod.jp/articles/mmdetection-detect-samples/

https://github.com/open-mmlab/mmcv/issues/3059

https://github.com/open-mmlab/mmdetection/issues/10380

https://qiita.com/apiss/items/48475abc20abf26c0d27

https://qiita.com/fam_taro/items/7f028dfeae2a79a10fe1

https://qiita.com/saliton/items/24b69d1fa274b21c489a

https://qiita.com/sister_DB/items/42560f551b65dd976217

https://qiita.com/tachyon777/items/ed33f18cd4c651539ead

Acknowledgments

Daiphys is a professional services company in research and development of leading-edge technologies in science and engineering.

Daiphys Technologies LLC - https://www.daiphys.com/